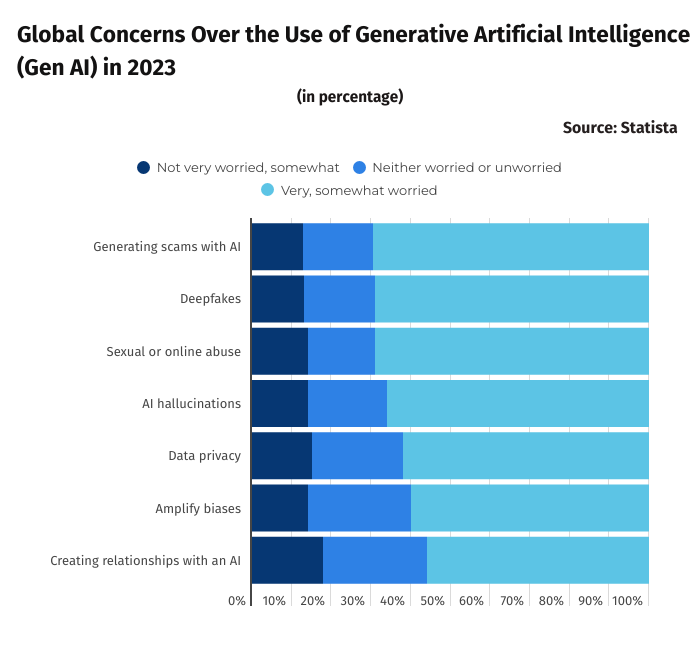

While Generative AI has proven invaluable, many consumers worry that these AI tools might scam them out of money or sensitive information. BanklessTimes.com reports that over 70% of people fear scams from Generative AI.

Elizabeth Kerr, the site’s financial analyst, comments that cybercriminals are sadly manipulating Generative AI’s ability to generate highly accurate responses in seconds for their scam attacks, which raises concerns about its negative impacts.

Global Concerns Over the Use of Gen AI

Marti DeLiema, an assistant professor at the University of Minnesota School of Social Work, raised her concerns about how generative AI could be the future of fraud schemes.

She added, “It feels like every day, I see a new story on the news about how criminals are using AI to deceive us.”

Now, it seems she is not the only one who fears these AI tools’ grave possibilities. Roughly 71% of people are worried they will be scammed with generative artificial intelligence, while 13% are unconcerned and 18% are neither worried nor unworried.

Deepfakes have raised many concerns, with 69% of people worried about their negative impacts, while only 13% remain unconcerned.

Moreover, sexual and online abuse enabled by generative AI has sparked fear, with about 69% citing concerns, while AI hallucinations ranked fourth, worrying 66% of people.

How Has Generative AI Been Used in Scams?

Gen AI fuels scams in various ways. For instance, scammers use prompt engineering to manipulate ChatGPT into aiding their fraudulent schemes. Similarly, one prevalent scam involves a deepfake video of Elon Musk that deceives victims into buying crypto. Additionally, there are notable cases like the IRS scam and the Amazon scam, both employing generative AI to exploit unsuspecting individuals.

Chatbots increased email scams by over 135% between January and February. According to Darktrace, criminals used these chatbots for ‘sophisticated linguistic techniques,’ confusing the general public, who identify most email scams from their spelling and grammar mistakes.

Generative AI’s ability to correct errors and instantly churn out flawless text in multiple languages allows for the growth of hyper-personalized phishing emails.

While most AI tools have tried to put safeguards in place to block fraudulent prompts, cybercriminals have found ways to bypass them, with 80% of security leaders’ organizations falling victim to phishing emails written by generative AI.

Heightened concerns have emerged over synthetic identity fraud fueled by generative AI. Scammers increasingly use generative AI tools to extract sensitive information, such as social security numbers and dates of birth.

James Bruni, managing director of IDology, commented on the use of Gen AI in criminal activity: “Tapping into gen AI and technology advances, fraudsters can scale their operations and produce outputs that are remarkably convincing and, in many cases, indistinguishable from content created by humans.”